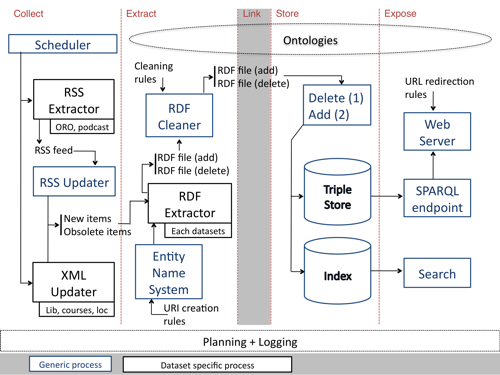

A large part of the technical development of LUCERO will consist of a set of tools to extract RDF from existing OU repositories, load this RDF into a triple store and expose it through the Web. This might sound simple, but in reality, achieving it with sources that are constantly changing and that were originally designed to work in isolation requires a workflow that is at the same time efficient, flexible and reusable.

The accompanying diagram gives an initial overview of what such a workflow will look like for the institutional repositories of the Open University considered in the project. It involves a mix of specific components, whose implementation must take into account the particular characteristics of the dataset considered (e.g., an RDF Extractor component depends on its input data), and generic components, which are reusable across all datasets. The approach for deploying this workflow is that each component, specific or generic, is realised as a REST service. The workflow for a given dataset is then materialised by a scheduling programme, which calls the appropriate components/services in the appropriate order.
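To make the role of the scheduling programme more concrete, here is a minimal sketch in Python of how it might chain REST components. The component names, the `localhost` URLs and the JSON payload format are all assumptions made for illustration; the actual LUCERO endpoints and message formats are not described here.

```python
import json
from typing import Callable, Dict, List, Tuple
from urllib import request

# Hypothetical endpoints, one per workflow component (illustration only).
WORKFLOW: List[Tuple[str, str]] = [
    ("update-detector", "http://localhost:8080/detect"),
    ("rdf-extractor", "http://localhost:8080/extract"),
    ("triple-store-loader", "http://localhost:8080/load"),
]


def post_json(url: str, payload: Dict) -> Dict:
    """POST a JSON payload to a component and return its JSON response."""
    req = request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with request.urlopen(req) as resp:
        return json.loads(resp.read().decode("utf-8"))


def run_workflow(
    dataset: str,
    steps: List[Tuple[str, str]],
    call: Callable[[str, Dict], Dict] = post_json,
) -> Dict:
    """Call each component in order, feeding each output to the next step."""
    payload: Dict = {"dataset": dataset}
    for _name, url in steps:
        payload = call(url, payload)
    return payload
```

Passing the `call` function as a parameter keeps the scheduler itself generic: the same loop can drive a different list of services for each dataset, and it can be exercised without a network by supplying a stand-in function.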

One of the points worth noticing in this diagram is the way updates are handled. A set of (mostly) specific components is in charge of detecting, at regular intervals, what is new, what has been removed and what has been modified in a given dataset. They then generate a list of new items to be extracted into RDF, and a list of obsolete items (either deleted elements of data, or previous versions of updated items). The choice here is to re-create the set of RDF triples corresponding to obsolete items, so that they can be removed from the triple store. This assumes that the RDF extraction process consistently generates the same triples from the same input items over time, but it has the advantage that updates need to be tracked only in the early stages of the workflow, which makes the workflow simpler and more flexible.
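The update strategy described above can be sketched as follows. This is only an illustration under stated assumptions: items are modelled as plain dictionaries with hypothetical `id` and `title` fields, the triple store is a Python set, the `example.org` subject URIs are placeholders, and `extract` stands in for a real RDF Extractor. The one property that matters is that `extract` is deterministic.

```python
from typing import Dict, List, Set, Tuple

Item = Dict[str, str]
Triple = Tuple[str, str, str]


def detect_changes(
    previous: Dict[str, Item], current: Dict[str, Item]
) -> Tuple[List[Item], List[Item]]:
    """Compare two snapshots and return (new_items, obsolete_items)."""
    new_items: List[Item] = []
    obsolete_items: List[Item] = []
    for key, item in current.items():
        if key not in previous:
            new_items.append(item)                 # added item
        elif previous[key] != item:
            obsolete_items.append(previous[key])   # old version of an update
            new_items.append(item)                 # new version of an update
    for key, item in previous.items():
        if key not in current:
            obsolete_items.append(item)            # deleted item
    return new_items, obsolete_items


def extract(item: Item) -> Set[Triple]:
    """Stand-in extractor: the same item always yields the same triples."""
    subject = "http://example.org/item/" + item["id"]  # placeholder URI
    return {(subject, "http://purl.org/dc/terms/title", item["title"])}


def apply_update(
    store: Set[Triple], new_items: List[Item], obsolete_items: List[Item]
) -> Set[Triple]:
    """Remove re-created triples of obsolete items, then add the new ones."""
    for item in obsolete_items:
        store -= extract(item)
    for item in new_items:
        store |= extract(item)
    return store
```

Note that the store never needs to record which triples came from which item: re-running the extractor on the obsolete version of an item is enough to know what to delete, which is why change tracking can stay in the early stages of the workflow.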

Another crucial element concerns the way the different datasets connect to each other. Indeed, the workflow is intended to run independently for each dataset. A linking phase is planned to be integrated right after RDF extraction (it is currently left out of the workflow), but this is essentially meant as a way to connect local datasets to external ones. Here, we realise the connections between entities of different local datasets through the use of an Entity Name System (ENS). The role of the ENS (inspired by what was done more globally in the Okkam project) is to support the RDF Extractor components in using well-defined, shared URIs for common entities. It implements the rules for generating OU data URIs from particular characteristics of the object considered (e.g., creating the appropriate URI for a course using the course code), independently of the dataset in which the object is encountered. In practice, implementing such rules and ensuring their use across datasets will remove the barriers between the repositories considered, creating connections based on common objects such as courses, people, places and topics.
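As an illustration of the kind of rule the ENS might implement, the sketch below mints a URI from an entity type and an identifying key such as a course code. The URI pattern, the list of entity types, the normalisation rule and the example course code are assumptions made for this example, not the project's actual conventions.

```python
from urllib.parse import quote

# Assumed base URI and entity types; the real ENS rules may differ.
BASE_URI = "http://data.open.ac.uk"
KNOWN_TYPES = {"course", "person", "place", "topic"}


def normalise(key: str) -> str:
    """Trim, lower-case and percent-encode a key so variants map to one URI."""
    return quote(key.strip().lower(), safe="")


def mint_uri(entity_type: str, key: str) -> str:
    """Return the shared URI for an entity, whatever dataset it came from."""
    if entity_type not in KNOWN_TYPES:
        raise ValueError(f"unknown entity type: {entity_type}")
    return f"{BASE_URI}/{entity_type}/{normalise(key)}"
```

Because the function depends only on the entity type and its key, two extractors working on different repositories obtain the same URI for the same course, for example `mint_uri("course", "M366")` and `mint_uri("course", " m366 ")`, and the resulting triples connect without a separate linking step.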
