The data

One of the first data sets to be made available on http://data.open.ac.uk is the contents of ORO (Open Research Online), the Open University’s repository of research publications and other research outputs. The software behind ORO is EPrints, open source software developed at the School of Electronics and Computer Science at the University of Southampton and used widely for similar repositories across UK Higher Education (and beyond).

ORO contains both metadata for items and full-text items (often as PDF). The repository includes a mixture of journal articles, conference papers, book chapters and theses. The data we are taking and presenting on http://data.open.ac.uk is just the metadata records, not any of the full-text items. Typical information for a record includes:

- title

- author/editor(s)

- abstract

- type (e.g. article, book section, conference, thesis)

- date of publication

The process

We had initially expected to extract data from ORO in an XML format (possibly RSS) and transform it into RDF. However, Chris Gutteridge, the lead developer for EPrints, added an RDF export option to version 3.2.1 of EPrints, and since we could get this installed on a test server we decided to make use of this native RDF support. We did make a few small changes to the data before we published it, mainly to replace some of the URIs assigned by EPrints with data.open.ac.uk URIs, as noted in the blog post ‘First version of data.open.ac.uk’.
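As a rough illustration of that URI replacement step, here is a minimal Python sketch. The two prefixes and the representation of triples as plain string tuples are assumptions made for the example, not the actual patterns or tooling we used.

```python
# Hypothetical prefixes: the real URI patterns may differ.
EPRINTS_PREFIX = "http://oro.open.ac.uk/id/"
PUBLISHED_PREFIX = "http://data.open.ac.uk/oro/"


def rewrite_uri(value: str) -> str:
    """Swap the EPrints-assigned prefix for the published one, if present."""
    if value.startswith(EPRINTS_PREFIX):
        return PUBLISHED_PREFIX + value[len(EPRINTS_PREFIX):]
    return value


def rewrite_triple(triple):
    """Rewrite the subject and object of a (subject, predicate, object) tuple.

    Simplification: a literal object that happened to start with the
    EPrints prefix would be rewritten too; a real pipeline would check
    whether the object is a URI or a literal first.
    """
    subject, predicate, obj = triple
    return (rewrite_uri(subject), predicate, rewrite_uri(obj))
```

Predicates are left untouched because they come from shared vocabularies rather than from the repository itself.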

Issues

In general, the process of publishing the data was quite smooth. However, once we had published the data it quickly became apparent there were some issues with the data. Most notably we found that in some cases two or more individual author details were merged together into a single ‘person’ in the RDF. Investigation showed that the problems were in the source data, and were caused by a number of issues:

Incorrectly allocated author IDs in ORO

ORO allows an (Open University) ID to be recorded for Open University authors, and we use this ID as a way of linking together works by an author. Unfortunately, in some cases incorrect IDs had been entered, leading to two separate identities becoming confused in our data.

Name changes

In some cases the author had changed their name, resulting in two names against the same author ID. While all the information is correct, it leads to a slightly confusing representation in the RDF (e.g. Alison Ault changed her name to Alison Twiner).

Name variations

In some cases the author uses different versions of their name in different publications. Good practice for ORO is to use the name as printed on the publication, which can result in different versions of the same name. For example, in most papers Maria Velasco-Garcia’s name is spelt with a single ‘s’ in Velasco, but in one paper it is spelt Velassco with a double ‘s’.

A particularly common inconsistency was around the use of accents on characters, where sometimes a plain character was used instead of the accented character. This seemed to be down to a mixture of data entry errors and variations in the use of accents in publications.
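One way to surface this kind of accent inconsistency is to compare names after stripping the accents. The following is a small sketch of that idea using Python's standard `unicodedata` module; the function names are made up for the example, and it is not the method we used to find the affected records.

```python
import unicodedata
from collections import defaultdict


def strip_accents(name: str) -> str:
    """Decompose characters and drop the combining accent marks."""
    decomposed = unicodedata.normalize("NFD", name)
    return "".join(ch for ch in decomposed if not unicodedata.combining(ch))


def accent_variants(names):
    """Group names that differ only by accents or letter case.

    Returns a dict mapping the accent-free, case-folded form to the
    sorted list of variants, keeping only groups with several spellings.
    """
    groups = defaultdict(set)
    for name in names:
        groups[strip_accents(name).casefold()].add(name)
    return {
        key: sorted(variants)
        for key, variants in groups.items()
        if len(variants) > 1
    }
```

Given a list of family names, any group that comes back is a candidate for checking by hand, since some differences will reflect how the name was actually printed on the publication.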

Incorrect publisher data

There were a couple of examples where the publisher had incorrect data in their systems, which had been brought through into ORO. One particular example split a single author with several parts to their name into two separate authors.

Having identified the records affected, the next challenge was correcting them. The first step was investigating each error, which could be challenging: where name changes had occurred, it was sometimes difficult to know whether this was the same person or not. The second was the question of where the errors should be corrected. In this case we were given edit access to ORO so we could make the corrections directly, but the question does arise: what happens if you can’t get the errors corrected in the source data set?

Conclusions

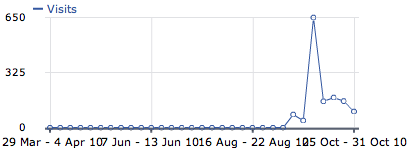

One of the interesting things for me is that these small errors in data would be unlikely to be spotted easily in ORO. For example, when you browse papers by author in ORO, behind the scenes ORO uses the author ID while presenting the user with the names associated with that ID. Because of this, you would be hard pushed to notice a single instance of a mis-assigned identifier. However, once the data was expressed as RDF triples, the problem became immediately apparent. This means that a very low error rate in ORO data is magnified into obvious errors on http://data.open.ac.uk.

I suspect that this ‘magnification’ of errors will lead to some debate over the urgency of fixing errors. While for http://data.open.ac.uk fixing the data errors becomes important (because they are very obvious), it may be that for the contributing dataset (perhaps especially large datasets of heterogeneous data such as bibliographic data) fixing these errors is of lower priority.

On the upside, using the data on data.open.ac.uk we can start to run queries that will help us clean the data – for example, you can find people with more than 1 family name in ORO.
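The check for people with more than one family name can be sketched in a few lines of Python. This assumes the triples have already been loaded as plain (subject, predicate, object) string tuples and that family names are expressed with the FOAF `familyName` property; both are assumptions for the example, and the person URI in the usage note is invented.

```python
from collections import defaultdict

# Assumed predicate: the published data may use a different property.
FAMILY_NAME = "http://xmlns.com/foaf/0.1/familyName"


def people_with_multiple_family_names(triples):
    """Return {person URI: sorted family names} for people with more than one."""
    names = defaultdict(set)
    for subject, predicate, obj in triples:
        if predicate == FAMILY_NAME:
            names[subject].add(obj)
    return {
        person: sorted(family_names)
        for person, family_names in names.items()
        if len(family_names) > 1
    }
```

For instance, a hypothetical person URI carrying both `Ault` and `Twiner` as family names would be returned, flagging the record for someone to decide whether it is a name change, a spelling variation, or a mis-assigned ID.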