LUCERO is all about making university-wide resources available to everyone through an open, linked data approach. We are building the technical and organisational infrastructure for institutional repositories and research projects to expose their data on the Web as linked data. It is therefore natural for the interface to this data, namely the SPARQL endpoint and the server that resolves the URIs in this data, to be hosted under http://data.open.ac.uk. The first version of the components underlying this site, as well as a small part of the data that will ultimately be exposed there, went live last week, with a certain level of excitement from all involved.

What is there? The data

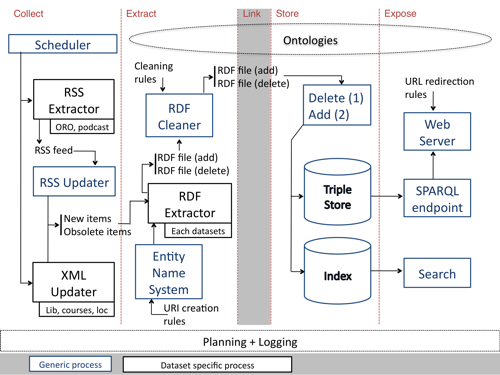

The “launch” of data.open.ac.uk happened relatively shortly after the beginning of the LUCERO project. Indeed, our approach is that the basic data exposure architecture has to be in place first, so that data can be integrated into it incrementally. As a first step, we developed extraction and update mechanisms (see the previous blog post about the LUCERO workflow) for two important repositories at the Open University: ORO, our publication repository, and podcast, the collection of podcasts produced by the Open University, including those distributed through iTunes U.

ORO data concerns scientific publications with at least one member of the Open University as co-author. The source of the data is a repository based on the EPrints open source publication repository system. EPrints already integrates a function to export information as RDF, using the BIBO ontology. We of course used this function, post-processing its output to obtain a representation consistent with the other (future) datasets in data.open.ac.uk, in particular in terms of URI scheme. The ORO data currently represents 13,283 Articles and 12 Patents, in approximately 340,000 triples (see for example the article “Molecular parameters of post impact cooling in the Boltysh impact structure”).

Podcast data is extracted from the collection of RSS feeds obtained from podcast.open.ac.uk, using a variety of ontologies, including the W3C media ontology and FOAF (see for example the podcast “Great-circle distance”). An interesting element of this dataset is that it provides connections to other types of resources at the Open University, including courses (see for example the course MU120, which is referred to in a number of podcasts). Podcasts are also classified into categories, using the same topics used to classify courses at the Open University, as well as the iTunes U categories, which we represent in SKOS (see for example the category “Mathematics”).

While these datasets represent only a small fraction of the data we will ultimately expose through data.open.ac.uk, the new possibilities opened up by exposing them openly in RDF, with a SPARQL endpoint and resolvable URIs, are already very exciting. In a blog post, Tony Hirst has shown some initial examples and encouraged others to share their queries over the Open University’s linked data. Richard Cyganiak has also kindly created a CKAN description of our datasets, for others to find and exploit.

The technical aspects

In a previous blog post, we gave an overview of the technical workflow by which data from the original sources would end up being exposed as linked data. The current platform implements parts of this workflow, including updaters and extractors for the two datasets described above. At the centre of the platform is the triple store. After trying several options, including Sesame, Jena TDB and 4Store, we settled on SwiftOWLIM, which is free, scalable and efficient, and includes limited reasoning capabilities, which might end up being useful in the future.

The current platform also implements the mechanisms by which URIs in the http://data.open.ac.uk namespaces are resolved. Very simply, a URI such as http://data.open.ac.uk/course/a330 is redirected either to http://data.open.ac.uk/page/course/a330 or to http://data.open.ac.uk/resource/course/a330, depending on the content requested by the client. http://data.open.ac.uk/page/course/a330 shows a browsable webpage linking the resource to related ones, while http://data.open.ac.uk/resource/course/a330 provides the RDF representation of this resource.
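The redirection rule can be illustrated with a minimal sketch. This is not the code running on data.open.ac.uk: the function name `redirect_target`, the list of RDF media types and the simple substring matching on the `Accept` header (ignoring q-values) are all assumptions made for the sake of the example.

```python
from urllib.parse import urlparse

BASE_HOST = "data.open.ac.uk"

# Assumed list of RDF media types; which types the real server
# recognises is not stated here.
RDF_TYPES = ("application/rdf+xml", "text/turtle", "application/n-triples")


def redirect_target(uri: str, accept: str) -> str:
    """Return the URL a client would be redirected to for a resource URI.

    Hypothetical helper: clients asking for RDF go to /resource/...,
    everyone else goes to the browsable /page/... view.
    """
    parsed = urlparse(uri)
    if parsed.netloc != BASE_HOST:
        raise ValueError(f"not a {BASE_HOST} URI: {uri}")

    path = parsed.path.lstrip("/")
    # Simplification: substring match on the Accept header, no q-value handling.
    wants_rdf = any(media_type in accept.lower() for media_type in RDF_TYPES)
    prefix = "resource" if wants_rdf else "page"
    return f"{parsed.scheme}://{parsed.netloc}/{prefix}/{path}"


html_view = redirect_target("http://data.open.ac.uk/course/a330", "text/html")
rdf_view = redirect_target("http://data.open.ac.uk/course/a330", "application/rdf+xml")
```

With these assumptions, a browser sending `text/html` ends up on the `/page/` URL, while a linked data client asking for `application/rdf+xml` ends up on the `/resource/` URL.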

A SPARQL endpoint is also available, which allows querying the whole set of data, or individual datasets through their namespaces, http://data.open.ac.uk/context/oro and http://data.open.ac.uk/context/podcast.
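As a rough sketch of how a query could be restricted to one dataset, the snippet below builds a SPARQL query and the corresponding GET request URL. It assumes that the two context URIs can be used as named graphs in a `GRAPH` clause, and that the endpoint accepts the query in a `query` parameter, as in the standard SPARQL protocol. The endpoint address is deliberately left as a parameter, and the helper names `sample_query` and `request_url` are hypothetical.

```python
from typing import Optional
from urllib.parse import urlencode

# Dataset namespaces given in this post, assumed here to act as named graphs.
ORO_GRAPH = "http://data.open.ac.uk/context/oro"
PODCAST_GRAPH = "http://data.open.ac.uk/context/podcast"


def sample_query(graph: Optional[str] = None, limit: int = 10) -> str:
    """Build a query listing a few triples, optionally from one dataset only."""
    pattern = "?s ?p ?o ."
    if graph is not None:
        # Restrict the triple pattern to a single dataset.
        pattern = f"GRAPH <{graph}> {{ {pattern} }}"
    return f"SELECT ?s ?p ?o WHERE {{ {pattern} }} LIMIT {limit}"


def request_url(endpoint: str, query: str) -> str:
    """Encode the query as a SPARQL protocol GET request URL."""
    return endpoint + "?" + urlencode({"query": query})


oro_only = sample_query(ORO_GRAPH)
everything = sample_query()
```

Passing `oro_only` together with the address of the endpoint to `request_url` gives a URL that can be fetched with any HTTP client; leaving out the graph argument queries the whole set of data.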

What’s next?

Of course, this first version of data.open.ac.uk is only the beginning of the story. We are currently looking at ways to represent and extract information about courses and qualifications from the Study at the OU website, as well as information about places on the OU campus and in regional centres (buildings, car parks, etc.).

More ways to access the data will soon be made available, including faceted search/browsing, and links to external datasets are being investigated. All this will be gradually integrated into the platform while the existing data is constantly updated.

Dr. Mathieu d’Aquin (project director) is a researcher at KMi. He obtained a PhD from the University of Nancy, France, where he worked on real-life applications of semantic technologies to knowledge management and decision support in the medical domain. As a member of the EU Integrated Project NeOn, Mathieu has researched large-scale infrastructures for the discovery, indexing and exploitation of semantic data (e.g. the Watson Semantic Web search engine, Cupboard system for managing semantic information spaces), as well as in numerous research prototypes in concrete applications of these developments.

Fouad Zablith is a researcher and PhD candidate at the Knowledge Media Institute of the Open University. Within the LUCERO project, he’s working on modelling and deploying data in various university contexts, published within http://data.open.ac.uk. His research PhD is in the Semantic Web area, focussing on ontology evolution from external domain data, by reusing various sources of background knowledge. Fouad is also the web consultant of the Open Arts Archive project (http://www.openartsarchive.org), responsible of the implementation and maintenance of the website.

Salman Elahi is a research assistant at KMi. He obtained his Master’s degree in Knowledge Management and Engineering from the University of Edinburgh. Prior to joining KMi, he has been working as a Software Engineer on projects related to the use of semantic technologies to enhance search systems in the domain of Freshwater Sciences. At KMi, he is involved in the Watson and Cupboard projects. He has also started his part-time PhD looking at issues related to identity and personal information management.

Prof. Enrico Motta is Professor of Knowledge Technologies at KMi and a leading international scientist in the area of Semantic Technologies, with extensive experience of both fundamental and applied research. Over the years, he has authored more than 200 refereed publications and collaborated with a variety of organizations, including Nokia, Rolls-Royce, Fiat, Phillips, and the United Nations, to name just a few, while receiving close to £7M in external research funding.

Richard Nurse is Digital Libraries Programme Manager at the Open University Library. Richard joined the Open University in 2009 and leads on Digital Library and website initiatives. He has considerable experience of library systems management and extensive experience of managing funded projects from the National Lottery and Wolfson Challenge Fund. Richard has been a key member of the recent collaborative JISC-funded TELSTAR (Technology enhanced learning supporting students to achieve academic rigour) project delivered at the Open University.
